Relay Conversation with Yvonne Förster (YF), Jaime del Val (JdV), Flora Reznik (FR), Warrick Roseboom (WR), Joel Ryan (JRy) & Jonathan Reus (JRe)

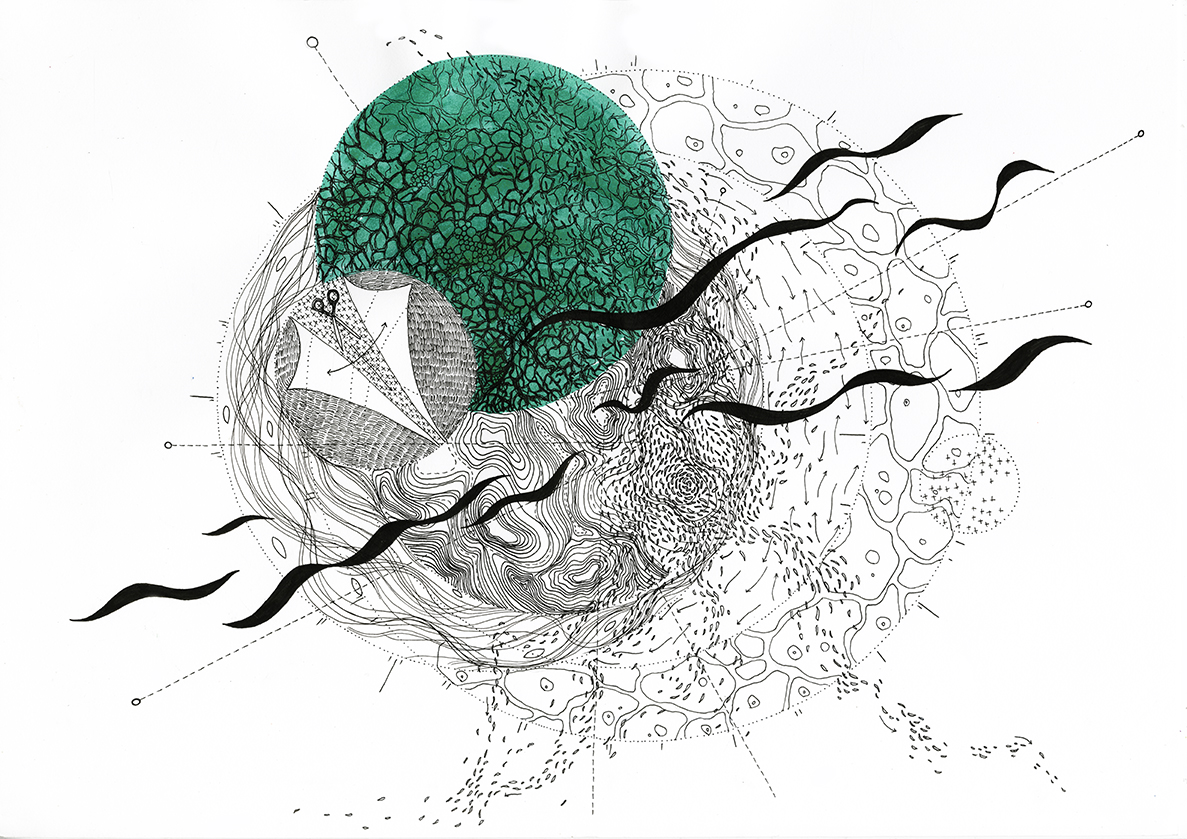

Illustration by: Sissel Marie Tonn

Editor’s note

The Reading Room is an event series produced in collaboration with Stroom Den Haag and the Instrument Inventors Initiative. Since 2015 we have been organizing this series with the intention of creating a platform for close-readings and discussion of theoretical texts among an ever-growing diverse community of artists, cultural practitioners and individuals interested in intellectual discourse. For every session we invite guest readers to share their knowledge and guide the community through texts and themes within their field of expertise. The Reading Room is made possible with financial support from Stroom Den Haag and the Creative Industries Fund NL.

As discussions in The Reading Room are so ephemeral, since 2017 we have been looking into ways of recording traces of these events. The ‘Relay Conversations’ are created in collaboration with the guest readers. These interviews are conducted as conversational relays between the three organizers (artists Jonathan Reus, Flora Reznik and Sissel Marie Tonn) and the guest readers.

The Reading Room #22 and #23, Time in the Age of Algorithms, took place on the 4th and 22nd of May with guest readers Yvonne Förster, Jaime del Val, Joel Ryan and Warrick Roseboom.

For the first session, to which this Relay Conversation part 1 refers, we read the Introduction to Erin Manning’s The Minor Gesture, the Introduction by Mark B. N. Hansen to Bernard Stiegler’s book Memory, and Timothy Barker’s “Media In and Out of Time: Multi-temporality and the technical conditions of contemporaneity”.

For the second session, to which the Relay Conversation part 2 refers, we read “Effects of the Neuro-Turn: The Neural Network as a Paradigm for Human Self-Understanding” by Yvonne Förster, “Microtimes, Towards a Politics of Indeterminacy” by Jaime del Val and “Time without Clocks: Human time perception based on perceptual classification” by Warrick Roseboom, Zafeirios Fountas, Kyriacos Nikiforou, David Bhowmik, Murray Shanahan, & Anil K. Seth.

The temporal structures of reality have become far more complex since digital technology became present in everyday life. Clearly the notion of ‘human’ time perception cannot exist without the times also hidden within the tools and technologies that co-construct our lived experiences. Lived ‘human time’ is never a given; it is multiple and idiosyncratic. However, the current algorithmic age is one dominated by universalizing models (normalized databases, lossy information encodings, and algorithms optimized for efficiency), leaving our diverse and plastic minds to brave a potential Cartesian jungle.

This cluster of The Reading Room looks to better understand the transformation of ‘human time’ in the age of algorithms – taking a broad range of examples from the arts, media, artificial intelligence and neuroscience. Besides considering the mechanisms by which the experience of time is co-created through digital technologies, and the socio-political ramifications of this construction, we will discuss how certain algorithmic representations of time and models of the mind, such as those based on recent neural-network based topologies, could potentially liberate digital systems from their efficiency dogmas towards more diverse and fluid temporalities.

Relay Conversation part 1

FR: Yvonne, during our discussion in the Reading Room session, a participant (Darien Brito) asked us to clarify what we meant by “algorithmic time”. How would you unpack this term? What part of it interests you, and what is problematic about it?

YF: Algorithmic time in the current theoretical discourse is a very vague concept. It can cover everything from measured, or ‘objective’, time to the more rhythm-like and cyclical structures of computational processes. Generally, algorithmic time stands in for any kind of temporal structure that is not qualitative and has no felt intensity. The time we experience as fleeting, standing, slow or quick is subjective time, experienced time. In analytic philosophy this subjective time would be called the A-series: a time structured by past, present and future, yet anchored in the here and now of a subject’s experience. Algorithmic time, on the other hand, is either time that plays out via computational rhythms, or objectified time that is somehow measurable and thus reducible to numbers. This can be associated with another concept from analytic philosophy, the B-series of time, which is structured purely by before-after relations and has no tense, because the B-series notion of time is observable and depends on an outsider who is situated in some external here and now.

This objective, or technical, time, however, constitutes to a huge extent what we experience via the computationally mediated content that digital devices and infrastructures generate for us. In this sense, what we might call algorithmic structures of time preempt what we experience through their mediations. For example, a computational system might generate content through an algorithm that predicts what the user would find interesting, before the user has actively searched for it.

It is necessary to understand and be aware of the temporalities of these preemptive processes in order to engage with technology in a productive and critical way. In my view, philosophical theories that stop at the mere fact that experience is constituted by digital, connected technologies make the mistake of implicitly or explicitly turning technology into a black box. We need to open the black box. This is difficult, because we have to find ways to render these imperceptible processes sensible. This is where art comes into play: to a certain extent, art can generate experiences that are otherwise inaccessible, and thus enable us to take a critical or playful stance toward technologies that are designed to function as order-and-control systems.

There is an important debate in analytical philosophy about which of the time-series, A or B, is more fundamental. The question famously started with J. M. E. McTaggart and his article “The Unreality of Time” (Mind, 1908). Today this debate is still locked in a paradoxical situation. On the one hand, our perception is constituted (preempted) through temporal processes beyond our perception, such as the computational processes governing smartphones and digital media (B-series). On the other hand, we can experience qualitative changes in our perception of time without being able to get to the source of that change. What remains unchanged, though, is the structure of subjective time experience: past, present and future (A-series). This structure might become more complex and entangled with other processes and technologies, but situatedness in some form of present is still the existential form of time perception.

McTaggart’s conclusion was that the A-series is more fundamental to time, because only through the tenses can we account for change. However, the A-series also contains a contradiction, because the present cannot be qualified as present without using tensed sentences: the present is present now, was future before and will be past very soon.

Put like this, every tense, be it past, present or future, contains all three tenses simultaneously, and is therefore contradictory in McTaggart’s view (for the complete argument see his article “The Unreality of Time”). He therefore concluded that time is unreal, since the perception of change cannot be accounted for without a contradiction.

My question is: why is it important to decide which temporal ontology is the most fundamental? If we conclude that it’s algorithmic time, then we have to concede that past, present and future are illusions. This would mean we have no foundation from which to build a critique of the time-politics established through machinic structures. If only subjective temporal experience were taken to be real, the problem arises of how to account for diverse and changing experiences of time from an ‘objective’ standpoint. Given Timothy Barker’s idea of the con-temporality or multiplicity of times, we could conclude that a choice between the A- or B-series being more fundamental is not necessary. What needs to be understood are the ways in which algorithmic time and subjective time intertwine, since it is this intertwining that accounts for the intensities and qualities of experience.

Jaime, what points of entry do you think we could consider to begin to account for these intensities and qualities?

JdV: Alternative forms of time can be found in internal bodily rhythms and in perceptual rhythms. I don’t mean biological clocks, but much more diffuse temporalities of nearly-conscious experience that we can elaborate and build upon. My favourite example is proprioception, which can be mobilised in excess of the rational subject, as non-conscious resistance to equally non-conscious modes of time control.

Suppose we take the idea that time emerges from movement: time is a ‘becoming measurable’ of movement that happens in particular circumstances, where some aspects of the field become fixed in geometric relations that afford measurement. Then the movements of water, of bacterial bodies or of the climate, like the internal proprioceptive movement of the body, also create alternative forms of time. They create fields with peculiar spatio-temporal conditions (what I call microsingularities), and it makes little sense to measure them from the outside following a pre-given, standardized Cartesian timeline. The swarming movement of water currents, bacteria, clouds, nebulae, flocks or proprioception is perhaps irreducible to timelines. These are not linear movements, and thus they open up the question of non-linear times. Nor are they simply reducible to ideas of circular or spherical time that reference the cycles of nature.

We have yet to invent appropriate notions of time. Swarming time could be a new notion of temporality to explore in these phenomena. It may then open up new dimensions of experience for us, where infinite/indefinite quantum-like histories and times are swarming around in changing fields of elasticity, in multiple potential vectors across a diffuse intentional arc of movement.

YF: We likely do not even have to go so far as to think completely different concepts of time to consider these intensities and qualities, but rather we can do much by establishing practices and spaces to experience diverse forms of perception and cognition (like dance, new social architectures…). Modelling new forms in the abstract would be just as preemptive as the structures that the software of smart watches project us into. Experience should guide the development of new spaces and practices, not models.

Jon, how can we reflect on technology if we cancel out the experiential subject (not the strong Cartesian one, but a weak subjectivity)?

JRe: Let me try to paraphrase your question. What basis do we have to reflect on technology without an observer who contains some experiential frame, one whose sensation is not preempted by the technological world they are trying to observe? This seems to relate to the question from analytical philosophy you brought up… Can B-series time be real without an A-series observer?

You traced McTaggart’s argument to its conclusion, as I understand it: time is unreal because to reconcile the A- and B-series leads to a paradox. Perhaps the issue with reflection on preemptive technological processes is that this way of imagining technology is unreal. An alternative would be to consider time as something that’s co-constructed by the observer and the potential within the technological system to include past, present and future.

Just thinking of an example… the Turing Machine is a conceptual invention that gives a formal, generalized way of describing algorithms. Essentially, the Turing Machine is a way of describing computational processes so that they become measurable and analyzable. The Turing Machine itself is based on the process by which one would do a mathematical calculation on a piece of paper, sketching out intermediary values as one works through to a solution. Alan Turing literally imagined this ‘machine’ as a person following a set of instructions.

If you were to really take the Turing Machine at face value, a person mindlessly following a method of note taking and stepwise instructions, it does seem very much like a sequential sort of time with only before and after relations. But the person is there, is doing and is fully part of the calculations, is working through and with and in the measurement system. What emerges from this interaction is technology. This idea of technology I think gives a lot more room for critical reflection while letting the experiential subject be a little less of a monolith.

Most modern software that we would say ‘preempts’ experience carries with it many potentials to be tensed through encounters with the world. Take the Turing Machine’s ‘sketchpad’: all software has an equivalent of this intermediary sketchpad in the form of its state. That state is represented by specific pieces of data that change throughout the execution of the program. In a sense, these pieces of data partly answer the question ‘where am I at?’, which could also be read as ‘when is now?’. Every step in the logic of a complex program is preceded by a non-arbitrary and potentially non-linear sequence of previous steps. So the current step in the process also contains within it some memory of where the process has been, as well as a possibility space of pathways into the future.
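The ‘sketchpad’ idea can be made concrete with a minimal Python sketch of a Turing Machine. The machine itself (a hypothetical binary incrementer) and the names `RULES` and `run` are our own illustrative assumptions. They were not discussed in the session. From one step to the next, the machine carries only its current state and the marks on its tape. Together these answer ‘where am I at?’. The `history` list is an outside observer’s log, added purely for illustration. It records the (step, state, head position) sequence that preceded each present step.

```python
from collections import defaultdict

# Hypothetical example machine: adds 1 to a binary number written on the tape.
# Each rule maps (current state, symbol read) -> (symbol to write, head move, next state).
RULES = {
    ("right", "0"): ("0", +1, "right"),  # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),  # hit a blank: step back and start adding
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "done"),    # 0 + carry = 1, finished
    ("carry", "_"): ("1", 0, "done"),    # ran off the left edge: write a new leading 1
}


def run(bits, max_steps=1000):
    """Run the machine on `bits`; return the final tape contents and a step log."""
    tape = defaultdict(lambda: "_", enumerate(bits))  # unwritten cells read as blank
    head, state = 0, "right"
    history = []  # observer's log of (step, state, head) before each transition
    while state != "done":
        if len(history) >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        history.append((len(history), state, head))
        write, move, state = RULES[(state, tape[head])]
        tape[head] = write
        head += move
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape[i] for i in cells).strip("_"), history
```

Calling `run("1011")` returns `"1100"` together with an eight-entry history whose last entry is `(7, "carry", 1)`. That is the final step before halting, and it was reached only by way of the seven steps logged before it.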

This one goes to anyone who would like to answer. Where do you identify the source of the concept of linear time? Why is it so problematic?

JdV: The source of linear time is in the invention of linear space, which happened in Greece between 600 and 300 B.C., i.e. in linear geometric organisations of movement and perception culminating in Euclidean geometry. It’s very problematic because it’s highly reductive. It was the main mode of algorithmic time control and reduction until the emergence of information systems in the 20th century, which enacted “real time” as the dissolution of time. Linear time reduces the diffuse or multidimensional character of movement/temporality to a single measurable timeline; it is thus related to causality and mechanism.

YF: Linear time in itself is not problematic. It probably came to bear on reality with the onset of modernity and its continued tale of progress. At that point it turned into ideology, and this is where things get problematic. Now we face a plurality of times: individual biographies, daily routines, digital real time and hidden computational processes (see Tim Barker’s text). The idea of progress in time (from the individual self as a perfectible entity to society and cultural processes) covers over this deep plurality in a way that can be problematic, because it hides the preemptive forces of technology. It should be a goal to actively engage with digital technology in order to understand how our perception is shaped by it and to uncover its creative and democratic potential.

FR: I would like to pause for a moment and go back to a pair of terms that have been used so far and that I think need some clarification: the pair objective/subjective. Is algorithmic, linear time even objective? Of course, we would first need to define what objective means. Suppose by objective we mean that it is not ideal, not completely imaginary or subject to individual feelings (like the feeling that “time went fast while I was having fun”), and that it has some material support and can affect our experience. In that case, I agree. But often when we say that something is objective, we mean that it is so by nature, that it is a fact. As came up in a conversation with the participants during the session, we may also mean that it “has been proven by science” (even though the comment aimed to point out precisely the opposite: that time is actually not continuous and linear).

This is why I think the sort of archeology of the notion of algorithmic time that Jaime presented in the Reading Room session is so valuable. This archeology tracked back the notion of algorithmic time, paying special attention to the artifacts that were created over time to shape our experience of time. Archeology stands for a history of the construction of the dispositives that slowly constituted what now exists (in this case, technologies of time). The focus is on the material aspects of this historical construct. What exists now is a condensation of a slow and long history of “making time” in a certain way. Whether or not we have a representation of it, meaning whether or not we are conscious of it, it indeed affects our experience. These are artifacts, a series of techniques and machines built by people in specific historical contexts, that constitute the narrative of linearity that has grown strong over time. Any “history of” is a form of fiction inhabited by a certain political and ethical programme. Even so, I think this historical account is really helpful for understanding how we today are imbricated with that which affects us.

To address your question, Jon, I can add one thing to what Jaime and Yvonne have already said. In my opinion, a brilliant moment in the history of philosophy ended Modernity and opened the door to Contemporary Philosophy: Heidegger’s critique of what he calls “the vulgar notion of time”. To put it simply, Heidegger is observing the success of the Industrial Revolution and how science has overthrown God. The model of the scientific method has expanded into a generalized, vulgar common sense: we treat reality the way scientists treat their objects in the lab.

Science needs to operate with a notion of linear time, at least when it is experimental science. An experiment relies on the principle of cause and consequence (B-series), in a time that is a continuum of causes and consequences. The repeatability of an experiment by a community of scientists aims to create a non-subjective approach, an “objective” scientist standing in the here and now.

But this repeatability of an experiment in different contexts, as all practising scientists know, is a reduction: namely, of what makes a context specific. Any experiment in science operates under a ceteris paribus method. It starts by specifying the conditions under which it will work (for example, normal temperature and pressure of the environment), while excluding all other factors that may interfere.

Heidegger states that this expanded into a mode of thinking about, or experiencing, reality in general. This is no coincidence: it happens at a historical time when a driving force of society needs to have things “at hand”, within our horizon of expectations, under control. This generalized perspective or ideology considers Man as the one who can rule reality from a pure present, where even other men fall into the category of predictable objects. This, of course, is a very poor tool for coping with a reality that is in fact far more complex than a lab. But that doesn’t mean this ideology hasn’t had very real effects up until today.

Yvonne, I know you work from a phenomenological perspective, so perhaps you can go much deeper into this issue that I have just very schematically presented. In your text you mention ‘the notion of flesh’, described by Merleau-Ponty, as a possible path towards a deeper understanding of experience in relation to time. Could you expand on this notion and on why you think the Husserlian notion of experience leads to problems?


YF: The notion of the flesh in Merleau-Ponty is meant to describe a generalized sensibility that is not centered on human intentionality. According to Merleau-Ponty, perception is rooted in a deep relation between observer and observed. The observer can be observed, touched, sensed just like a thing. Things also have a common ground with perceiving subjects. They stand in perspectival relation to one another and to the perceiver. They thus fulfill the first condition for being potentially sentient, namely having a perspective that relates them to anything else in the environment. The world of perception in Merleau-Ponty is understood as a tight net of relationalities and potentialities. Sensing cannot be localized in the mind of the observer; rather, it is dispersed in the environment. This tight net of relationalities is what Merleau-Ponty calls the flesh, a generalized sensibility. With regard to time, this means that there is no pure present. The present is always infiltrated by past and future, and different relations can have different temporalities. According to Merleau-Ponty, perception is always situated (in a certain time, environment and limited degree of attention). Everything that escapes the view has the potential to come into view and thus to change the set of perceptual relations completely.

Perception takes place within an arc of intention that is not caught in the here and now, but opens possibilities to proceed, come back, re-interpret and see anew. This dynamic view of perception can be a model for researching human-machine relations with respect to the experience of time. Experience and material, quality and measurement can be viewed as interchangeable dimensions that give rise to new configurations and situations, adding a playful perspective to the regime of everyday life.

More artistic examples can be found on Jaime’s project website metabody.eu. For example, he attaches small surveillance cameras to the body in order to project moving images of tiny body parts at large scale onto walls. These peculiar images of body movement alienate standard body schemas. They also open up new, technology-mediated creative movement experiences.

JRe: Jaime, during the Reading Room session you mentioned a “Cartesian anxiety” and linked it to the birth of prediction technologies and to the “algoricene”. Could you explain this?

JdV: The Cartesian anxiety, or as N. Katherine Hayles put it in How We Became Posthuman, “liberal subjectivity imperiled”, is a fear experienced by the subject that emerged with Renaissance perspective (the fixed, disembodied, rational, autonomous, categorically split subject of humanism). This subject felt it when faced with an unpredictable world, once 20th-century physics had recognised that the world is not Newtonian or deterministic but ontologically chaotic and unpredictable. The World Wars and the atomic bomb intensified this fear. Cybernetics could be a response of the Cartesian subject to the anxiety generated by an unpredictable world, in the attempt to retain order, control, and itself as a bounded entity.

During the Reading Room session I asked the participants to try to think of experiences that were not aligned with clock time, and it was quite disturbing to notice how hard it was to imagine such a thing. Is an alternative to algorithmic time even thinkable? Do we really live that aligned, or are there perhaps everyday experiences that are not aligned? Can you think of a way of understanding experience, or perhaps certain specific experiences, that are at the same time in and out of that algorithmic time?

JRe: I remember that. You asked if anyone could think of a temporal experience not experienced as clock time, and nobody was really able to come up with something. I think it was probably too early for a question like that, because we were still picking apart what kind of a thing ‘algorithmic time’ is. Throwing in the idea of ‘clock-time’ at that point, and conflating the two, seemed to throw the group for a loop.

Given the texts we read, and I’m especially thinking of Tim Barker’s text here, I think it would have been beneficial not to ask the question so generally, without mentioning the scales and processes of interest. Barker specifically describes two temporal situations that algorithms co-produce. In the first, algorithmic processes and their media infrastructures, such as search engines and social media networks, provide contact with the events of the world at nearly instantaneous speeds. The second is the process by which the technicity of the media draws people into its own logic and structures. This creates totally new types of temporal experience within which the events of the world play out.

‘Clock-time’ I understand as the type of experience that emerges from a very specific technicity, a co-creation of experience and method that is focused on measurement and synchronization. Prior to clocks, this technicity would emerge in a different way, with phenomena that provided a semi-regular rate of change and could be observed by different individuals over distances. Solar, lunar and astronomical time-keeping systems, for example, embodied this technicity. What is different in algorithmic time? Well, the stars don’t accelerate at any rate we can perceive. There’s no overclocking, efficiency improvements or Moore’s law to the motions of the universe. So there is an absolute temporal/cyclical reference frame there that one could argue is fundamental to life on earth. Is it like clock time? Yes, in its instrumentalization, and no, in its ontology.

Jaime, you’ve described some historic examples of what you referred to as ‘algorithmic’ techniques, such as the urban grid developed by Hippodamus of Miletus in the 5th century B.C., or the mechanical perspectival drawing machines of the Renaissance. Do you see any salient danger in the way these kinds of algorithmic approaches are propagating?

JdV: Algorithmic technology is becoming more opaque and centralised than ever, epitomized in Google’s or Facebook’s Big Data algorithms. These are opaquely designed by the corporations and influence planetary-scale processes of unprecedented dimensions and depth. Moreover, their emergent nature can become unknowable even to the programmers. Hyper-algorithms are hyperconnected, emergent algorithms with an all-encompassing will to control. They focus on the preemption of emergence, futurity and potentiality, not just of the already known or categorised, while operating at microsecond speeds that far exceed the slow algorithm of rational consciousness.

Flora, what do you think of Stiegler’s approach (as presented in the introduction by Hansen) to the problem of memory aids and the opposition between mnemotechniques and mnemotechnologies?

FR: Towards the end of Hansen’s introduction there seems to be a gloomy panorama: we live in the aftermath, subjects are alienated from the technology they have created, and people are left helpless in the hands of corporations. Then, in the last two pages, he takes a hyper-optimistic turn. He states that the emergence of the Internet brings the promise of the end of the dissociation between producers and consumers, and hence “memory [can] once again become transindividual”. All this sounds very old-fashioned to me.

Television and broadcasting were not so evil, nor will the Internet save us. It is not the case that techniques are “individual exteriorizations” as opposed to technologies in the hands of corporations, which their users can only fight by comprehending their internal logic. I don’t mean that the dangers Jaime has just mentioned are not worth considering. I’m just pointing out that this dichotomous approach leaves us hopeless and without effective theoretical tools to think of actual alternatives.

The key to the problem I find in this text lies in this excerpt: “To the extent that participation in these new societies, in this new form of capitalism, takes place through machinic interfaces beyond the comprehension of participants, the gain in knowledge is exclusively on the side of producers”. Here the term “participation” immediately made me think of our discussion in a previous cluster of the Reading Room, “Technologies of survival, Postcolonial Perspectives on Computation”. There, our guest Nishant Shah gave a refreshing postcolonial take on the problem of participation. The colonial model tells individuals what they have to learn. If they fail to know this or that, their way to emancipation is closed, and they will never participate in the society of knowledge. But the experiences of the community that Nishant evaluates in his text teach us that there are misuses, such as piracy, which can generate alternative knowledges and communitarian emergences. These can even contribute to building new infrastructure that encourages social development in unexpected directions.

I was surprised that Hansen uses Derrida as almost his main reference but never gets to the point of dissemination. Dissemination is the nature of all technology: it is the intrinsic capacity of things to be misused, to generate practices that are unexpected and creative. Furthermore, since we are immersed in a phenomenological discussion, we must say that since Husserl the object has not been opposed to the subject, and therefore we cannot talk about alienation. A weaker notion of the subject is actually a great theoretical thread from which to start thinking politics differently.

JRe: Jaime, in Erin Manning’s text she presents the figure of the neurodiverse. How is this useful for thinking a different notion of subjectivity? How does this relate to our topic of time and algorithms? How can we mobilize nonconscious cognition in favor of plasticity (vs flexibility)?

JdV: I think subjectivity is a limited notion inherited from perspective, one that presupposes a somewhat bounded inside. We need to shift to a notion of ecologies. We should look into the degrees of indeterminacy/openness, or of alignment/closure, of the ecologies we are always already part of. Neurodiversity gives us a chance to consider ways of relating to others and to the environment that don’t take the radical split of perspective and rationalism for granted. What we need is to see how to generate sustainable ecologies across neurodiverse modes of experience: ecologies that are continually open in their perceptual-cognitive ratios, plastic ecologies. These stand opposed to the flexible, adaptive ecologies of hypercontrol society, where we have to constantly re-attune to the affordances of smart environments in which algorithms continually redirect our attention.

The neurodiverse seems to be a model to think of collective subjectivities as well. Jon, since you have worked on the Undercommons in a previous Reading Room session, and this figure reappears in Manning’s text, could you reflect on how this mode of collective subjectivity seems to escape the logic of the cartesian anxiety? Is there room for “political urgency” in digital, hyper-scheduled time? What is the potential you see in digital time?

JRe: This might actually be another approach to the technicity of measuring and synchronization. How can we be together as a whole body, without measure, messy and frightened? In The Reading Room #16 we read chapter 6 from The Undercommons, entitled ‘Fantasy in the Hold’.

This chapter introduces a few concepts relevant to our discussion. One is the concept of ‘logistics’, which The Undercommons treats in a very broad way to encompass orchestrated human activities of transport and movement. There’s already a strong link there to what we’ve been talking about. Logistics of this type prioritizes efficiency, repeatability and inter(intra)operability. Moten and Harney’s poetically charged writing connects logistics to the Atlantic slave trade. In their account, these priorities come at the expense of the consideration of human lives. In current times they do so through highly complex algorithmic systems that attempt to predict and preempt problems, redirecting shipping lines or airplane flight paths in near real time when new information enters the system. The Undercommons asserts that the modern goal of logistics is to dispense with the subject altogether. This makes sense insofar as any massive-scale human effort across space and time demands sophisticated systems of inscription, operation and protocol, if only because human memory is too limited and subjective experience too much of a risk. A logistical system, just like an algorithm, must produce a solution no matter who the operator is. However, there is also a uniquely contemporary anxiety of logistics founded in survival. It becomes apparent when one realizes how much the existence of the global populace depends on these logistical systems being efficient and adaptable at extra-human scales and speeds, and on their becoming increasingly so each year.

What are the conditions of being without measure? In its final section, chapter 6 of The Undercommons introduces another concept, hapticality: a kind of feeling together that is not collective but something else, something that escapes description yet is felt if you’re in it. Here’s a passage from that chapter which describes it:

“Previously … feeling was mine or it was ours. But in the hold, in the undercommons of a new feel, another kind of feeling became common. This form of feeling was not collective, not given to decision, not adhering or reattaching to settlement, nation, state, territory or historical story; nor was it repossessed by the group, which could not now feel as one, reunified in time and space. No … he is asking about something else. He is asking about a way of feeling through others, a feel for feeling others feeling you. This is modernity’s insurgent feel, its inherited caress, its skin talk, tongue touch, breath speech, hand laugh. It is the feel that no individual can stand, and no state abide. It is the feel we might call hapticality.”

Moten and Harney relate this feeling to the experience of being a being in shipping, as a slave in the hold of a ship on the Atlantic. Being together outside of one’s own volition, without any space-time frame of reference. Could this be one way of describing the unbearable anxiety of immeasurability?

In relation to the notion of the undercommons as an ever-moving entity, never fixed in one given site, is movement interchangeable with the concept of change? Which is prior?

JdV: Movement is prior to change since change implies something that changes, at least in our current understanding. Radical or pure becoming is instead not the becoming of something but of movement.

Relay Conversation (part 2)

FR: Dear Warrick, could you roughly explain how the artificial neural system that you and your team built works? Was there a primal hypothesis that led to the construction? Did you confirm that hypothesis or not? What new knowledge did you get from your experiments? And also: What motivated you to come up with such a thing? Is the machine imitating human perception or is it a model to understand how humans perceive?

WR: There’s a lot to unpack in the question… I will start from the end: my job was to build a model of human-like time perception with the goal of using it in a humanoid robot. I guess the basic idea is that if the robot has a better idea of how the human is experiencing the passage of time, then it can interact more naturally. Imagine you are involved in a collaborative act with the robot, such as preparing a meal (this is the core demonstration scenario in the project), and you make a natural language request to the robot to bring you some ingredients in a few minutes. The robot could take this literally and bring the ingredients in 3 minutes, or it could understand, based on the content of your experience, when you would think that period of time had elapsed.

So that was the goal. But foremost, I am a researcher on human perception, so my goal is mainly about building models that reproduce the widest variety of human behavior, with biologically reasonable foundations, regardless of their applicability.

When starting the project, it was clear that human time perception is most characterised by its deviations from clock time – we have lots of intuitive cases that describe this: “time flies”… etc. These situations are all related to the content of experience. So it seemed silly to me to aspire to a model of time perception that starts from an assumption of regularity (like the traditional ‘internal clock’ approach) and then has to deviate in many ways in order to accommodate what is intuitively true: that experience is content dependent. An alternative would be to build a model based on the dynamics (change) of the content of experience as seen from the outside (simply the change in the world). But people had already tried this to some degree, and it obviously ignores that some level of interpretation happens to produce perceptual experience. So we needed a proxy for the kind of processing that is the foundation of perceptual experience when humans are faced with dynamic input. For this we used a deep convolutional image classification network.

The model is based on tracking the amount of change that occurs in the classification network (acting as a proxy for human perceptual classification) in response to its input (video) during a to-be-timed epoch. Change is given by the difference in activation pattern across the network between samples. If the difference exceeds a threshold, a salient change is deemed to have occurred, that is, the world is now meaningfully different from what it was. The model simply accumulates how many of these changes occur in an epoch, and this gives a kind of arbitrary unit of time: less of it would feel like a shorter interval had elapsed, more of it like a longer interval. This is equivalent to a basic sense of time. We then need the model to output this time sense in standard units so it can be compared with other things, like clock time and human estimation. Converting this arbitrary unit into a standard label is a regression problem (mapping one set of values onto another set as closely as possible), so we used a simple regression technique called support vector regression (we tried many; it isn’t really important which one we used, except that it works). This then provides model estimates in seconds that can be compared with other estimations.
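
The steps above (a per-sample activation difference, a salience threshold, an accumulated count, and a regression onto seconds) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the team’s implementation: activations are plain lists of floats standing in for one layer of the classification network, the difference is taken as a Euclidean distance between consecutive samples, the threshold is fixed, and ordinary least-squares linear regression is swapped in for the support vector regression the model actually uses. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

# Hypothetical stand-in for one layer's activation pattern at one video sample.
Activation = Sequence[float]


def activation_distance(a: Activation, b: Activation) -> float:
    """Euclidean distance between two activation patterns (an assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def count_salient_changes(samples: Sequence[Activation], threshold: float) -> int:
    """Count consecutive-sample differences that exceed the (assumed fixed) threshold."""
    changes = 0
    for previous, current in zip(samples, samples[1:]):
        if activation_distance(previous, current) > threshold:
            changes += 1  # the world is now "meaningfully different"
    return changes


def fit_linear(counts: Sequence[float], seconds: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of seconds = slope * count + intercept (a stand-in for SVR)."""
    n = len(counts)
    mean_c = sum(counts) / n
    mean_s = sum(seconds) / n
    var_c = sum((c - mean_c) ** 2 for c in counts)
    cov = sum((c - mean_c) * (s - mean_s) for c, s in zip(counts, seconds))
    slope = cov / var_c
    return slope, mean_s - slope * mean_c


def estimate_seconds(changes: int, slope: float, intercept: float) -> float:
    """Convert the arbitrary unit (number of salient changes) into seconds."""
    return slope * changes + intercept


if __name__ == "__main__":
    # Toy "video": five samples of a two-unit activation pattern.
    video = [[0.0, 0.0], [0.0, 0.1], [1.0, 0.0], [1.0, 0.0], [3.0, 0.0]]
    n_changes = count_salient_changes(video, threshold=0.5)
    # Toy training pairs of (change count, duration in seconds).
    slope, intercept = fit_linear([1, 2, 3], [2.0, 4.0, 6.0])
    print(n_changes, estimate_seconds(n_changes, slope, intercept))  # prints: 2 4.0
```

Fewer salient changes yield a shorter estimate and more yield a longer one, as described above. With real data the mapping would be fitted on labelled durations, and `fit_linear` could be replaced by a support vector regressor such as scikit-learn’s `SVR`.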

This is the background for building the model; the hypothesis, then, is simply an array of questions about how well it captures these deviations in human time perception. So we tested the model by inputting the same videos that we showed to human participants, and had both give estimates in seconds of how long the videos were. Both the human and the model estimates varied with the type of scene observed, and in general the model estimates matched human estimates well across these scenes. So we would say that the model is doing a good job and, on the basis of its construction, is a reasonable approximation of how humans might perceive time. This needs further validation, using neuroimaging and other experiments, and we are currently engaged in doing so.

Yvonne, in relation to a conversation we had after everyone else had left: as described in your text, there is a bias in popular culture towards seeing artificial intelligence as powerful, other, and eventually superseding the human realm in some way. This contrasts with the way that many people using basic versions of current AI (such as in our model) see it: as very dumb, limited machines that can sometimes be used to make somewhat difficult problems easier. In our case, they are just a hammer to the nail of image classification. It is interesting because the extent to which these dumb AIs are in people’s lives now is huge – many people have interacted with Siri or Alexa or other personal assistant systems. These systems are amazing in that they can do things like natural language recognition very well, but they are enormously frustrating to interact with. They have extremely poor contextual knowledge, and interacting with them shows just how unintelligent they are. What is the source of this gap between the aspiration that casts AI as god-like and the reality of what we have? Is it anything more than an attempt to salvage our dualist intuitions from the scrap-heap via the ‘Singularity’?

YF: It is true that there is a huge discrepancy between actually existing artificial intelligence, the things machines can accomplish, and what we imagine them to be able to do in the future. Science fiction, as well as popular science and tech celebrities like Elon Musk or Ray Kurzweil, all play on our imaginings of future technologies running wild.

The ambivalence I see at the moment is that most people are not aware of the extent to which our daily lives make use of artificial intelligence, in the sense of information technologies driven by self-learning algorithms and the countless connected devices and appliances in households and public spaces. On the other hand, they far overestimate the actual intelligence of digital technologies. The reasons for this tendency are manifold.

One of them surely is a certain scepticism toward new technologies, changing business cultures and emergent ways of communication. More important, in my view, is a particular “Zeitgeist”, the contemporary conditio humana if you will: the way humans see themselves and think of themselves in relation to machines. Why would we be afraid of being superseded by machines that can’t even digest irony? Because people tend to limit their idea of human intelligence to measurable, predictable and objectifiable abilities. Basically, what is measurable and can be put to use to maximize output speed is associated with intelligence, like analysing huge amounts of information in a short amount of time or memorizing facts. In this regard computers have already outrun humans for some time.

In my view this idea of human intelligence is far too bound to a logic of production and economic success. One can already observe this in the widespread and unfortunate application of the standards dictated by the PISA study to school education.

What is measured there can be performed much more quickly and easily by machines. Seen from this perspective, intelligence will easily give the impression that humans might be doomed to become an outdated species.

Instead, it would make sense to focus on creativity, empathy and the potential of human-machine relations beyond efficacy, and to explore new ways of interaction, as the painter Liat Grayver does, for example, by collaborating with AI-driven robots in order to understand and open up new horizons in the art of painting. She also questions the strong notion of the artist by understanding robots as collaborators rather than as means to an end.

With regard to time, one could say that we live in a strange simultaneity of a present that is underestimated in its novelty while the presence of a precarious future is felt as if it were already reality. This problem arises because we subject the human image to a norm of efficacy that is barely human and, first and foremost, not productive for creating positive forms of interaction in diversified life-worlds that house all kinds of intelligences and abilities.

YF: Warrick, what consequences do you think your research could have for medical practice, for example for how people with depression or other psychological conditions are treated? After all, not everything seems to be in the head. Can we make use of the insight into the importance of embodied experience in the way we treat and speak about certain conditions?

WR: There is great pressure for basic research to be directed towards application. When it involves humans, more often than not the pressure is towards medical applications. I could construct a story about how this work might inform the cases you are talking about, but I think it would be a stretch at this point. Certainly, moving away from ‘clock models’ to try to retrieve something more interesting about how human experience is pieced together (and how this piecing together is at the same time time itself, as well as informing different ways of viewing time in experience) will hopefully draw some people away from the more simplistic view of how humans interact with their environment to generate the content of experience.

This certainly comes with the constraint of being embodied, but it is not clear to me yet whether the embodiment aspect is anything more than a constraint on the problem.

I recall there was some discomfort (particularly from Flora, I vaguely remember, but I ask this of anyone who would like to answer) as I was explaining my work with the idea of working within a naive physicalist framework with regard to time. The concern was that comparing to a clock, or to any metric at all, as we do both when using computer-based models and when comparing human and model-generated estimates, is a problematic starting point. Joel also mentioned that time from the point of view of modern physics (as with all things in modern physics) is no longer consistent with the naive physicalist point of view (and hasn’t been for at least a century now).

Am I misremembering this point, or would anyone like to expand on why they felt this discomfort? Or whether they feel there is something irreconcilable here?

FR: Dear Warrick, thanks for your question. It is always confronting to meet researchers who are working from a different discipline. It would be very easy to just talk with people who speak your language. My discomfort had a lot to do with my having a really hard time understanding your language. First of all, from what I managed to grasp, I think that your research, which points to the experience of time being based on content, is valuable, and I appreciate the effort to discredit a model that makes us more similar to robots. But I must admit that I do have a certain general skepticism towards the use of computational methods to articulate models of the human mind or experience. One main issue I see is precisely what you were tackling just now: the pressure to produce applications, in medicine for example. This pressure is political and economic, and it requires political thought to deal with it.

I just feel that there is a big risk of science taking over and rendering philosophy virtually useless, which was indeed the project of the anti-metaphysical movement of early philosophy of science. But philosophy is much more than an auxiliary tool (which ultimately could be discarded altogether): philosophy is the discipline that can maintain criticality and produce speculation towards creating models that are not empirically verifiable, that are perhaps utopian and absolutely counter to common sense. Philosophy doesn’t create ex nihilo: it is always a conversation with the history of the philosophical tradition, and there is a necessity, a historical and political necessity, in that process. Following on what Yvonne said, there is much about experience that is not computable.

Affect is by definition not computable. I’d like to think of experience as that excess (going into this would take forever, but in short, I’d like to think of experience as something that is exceeded by the other of what it can conceive, by what lies beyond its horizon of expectations. This concerns our notion of time as well, and the accent we put on what is unexpected, on what can surprise us, rather than on what we can quantify and manipulate). You explicitly mentioned that you are aware of this but still focus on working with what can be translated or modelled computationally. It is just a matter of accents, of where to put the attention.

I am sure that we have all thought about how science, all our technological tools and foundational systems are biased, and that they have come to be considered valuable “tools” through historical processes that have a lot to do with power. But I never cease to be amazed, and also very much frustrated, when talking with some friends and bumping into a total trust in science, a certain “common sense” that has indeed replaced God with Science (or “human instincts”, “nature” and so on) as the touchstone of truth. And I see this as a great danger. So I choose what kind of discourse I bring to the fore. It’s like in politics: sometimes one agrees with parts of what one party is proposing and not with other parts, but ultimately one needs to decide where one stands and whose game one is playing (liberals who favour dialogue in the “public sphere” will disagree with this take on politics, but I am not one of those).

Finally, I don’t think there is one naive physicalist notion of time opposed to a more advanced and true physics, as in Newtonian mechanics versus quantum mechanics. This very linearity is what I would love to discuss when thinking about time. Perhaps there are various overlapping modes of experience based on different notions of time, according to what activities we are carrying out and what goals we are pursuing.

Joel, you asked a question during the RR session that was left unanswered, and I’d love to go back to it. If I remember correctly, you asked whether we (artists) “submit to synchronizing to this dominant mechanical time”. What did you have in mind when asking that? Could you explain what you mean by mechanical time? And what alternatives do you see to this?

JRy: I was wondering where subjective, user-dependent time is authentic and valid. Time is now defined by its measure rather than its experience. Surveyors and astronomers don’t confuse space with their instruments, tape measures and theodolites, but most of us confuse time with clocks. The definitiveness of number and the objectivity of clocks allow us all to share time without prejudice: we catch a plane and get to work “on time”. But does the singular human experience of time remain valid?

There is no evidence for one universal, all-embracing time. Physics embraces a manifold character for time. Time runs at different rates from different points of view, different locations and paths in gravitational space. This simultaneous, overlapping infinity of time perspectives is mind-boggling to imagine (cf. differential geometry). The man on the street (especially in monotheist traditions) has yet to take this in, which makes every discussion belabor the idea of “objective time”.

There are two issues here. One is philosophical, about the true nature of time. The other is about how we share time. The problem, if it is a problem, of the subjectivity of time perception seems to go away when we are singing and dancing together. We know we could collide but mostly we don’t. Music is a place where we join to imagine and enact temporal experiences of endless variety from fretted to figured.

This time is central to human perception and existence. Do we submit to the clock to make music, to dance, sport, cinema, to ride a bike, play ball or play of any kind? These are the most quantitatively accurate and refined moments of our perception. Not lab time but my time and your time. I can sing a little and know how bad I am, but I can listen to another great singer like Umm Kulthum and know how wondrous is the time she makes.

On the other hand, the time of music is direct personal experience. You don’t have to ask anyone whether this is fast or slow, awkward or graceful. Of course there are caveats; we have been warned of the dangers of music. It is the most familiar scene of our unmediated judgement, as when King David danced half-naked around the Ark of the Covenant, to the disgust of the royal family. Music is where we play with our relation to time as we perceive it, not merely as numbers or abstractions.

So in music we prefer to make time. No one wants to hear us chat about it, they want to use their ears to join the flow, feel beats, tune into arcs of passing time. Clocks ignore us, count with or without us. Instead of waiting for the current “measured” (but otherwise unknown) period to pass we can join the dance.

JRe: Joel, we share very similar artistic values when it comes to feeling out what it means to be a musician, and more specifically a player, intervening through computing technologies.

When I came to Amsterdam ten years ago on a research trip to STEIM, I came to see you as a pioneer for your work using real-time computer music systems, practically as soon as “real-time” performance with computing machines was even a technical possibility.

Can you tell a bit more about your own experiences as a musician relating to the timing of computation?

JRy: Really, when I started out programming music I was seriously disappointed with the time that came out of the software I wrote. This was only solved when I accepted that using my hands to compensate for awkward computer timing was a good thing, not “cheating”, not a failure of programming.

In other words, I always knew when things should happen when the computer didn’t. This is not to say that machines can’t be better at time, but simply that it’s far easier to play time than to describe it. The media of logic and ordinary language are not suited to the description of fluid, expressive time.

JRe: There seems to be something ontologically problematic about describing flow using any logical system or system of natural language. I think it has to do with their categorical nature: the categories and universalisms that are so basic to mathematical, computational descriptions don’t do right by musical gestures.

Or at least, developing an intuition for description as sophisticated as the intuition of our hearing is a tall order, although certainly not impossible.

JRy: The awkwardness and inadequacy of descriptive approaches to time in music is not a popular topic at universities because language and description are the game there. The refinements of music apparently all belong to the text, the mistakes belong to musicians. It is rather the other way around.

In conservatories, composition is often taken to “be” a linguistic act: “Mais où est l’écriture?” (“But where is the writing?”), as Pierre Boulez used to say back in the lab. I sympathize with his yearning for lost times, but music is not lost, just moving on with a new form of notation. In computer science, wherever you are, the point is not actually to make music but to get code to make music. Subtlety is, I would argue, necessarily lost.

JRe: Or even, subtlety is pushed outside of the problem domain. You make the tool and then it’s the musician’s job to think about subtlety.

JRy: At MIT I got a demo of synthetic blues, at IRCAM virtual castrati. If this “works”, what is the point? Certainly not an ironic critique of AI. If the point is music making and mechanical time doesn’t make it, there are other options.

You can say that musicians make time or that musicians color time, I prefer the former. I spoke of submitting to mechanical time because there is a choice. I know this because music is a place where direct experience is always in the foreground.

YF: I like the expression of making time. I think that is precisely the point in my idea of what time is about. Most certainly there is no unified, homogeneous cosmic time. Time is always becoming. Merleau-Ponty would say that it springs from the contact of subject and world, just as a fountain springs from its source. In that sense we might even speak of a direct experience, even if this is unintelligible from an epistemological point of view. When time as experience originates from being engaged within an environment, then there is something very direct about that. The experience of rhythm, for example, is characterized by resonance, and resonance establishes a connection in which subject and object resonate. Rhythm thus has a unifying material power that doesn’t distinguish between instrument and musician, sender and receiver, or subject and object for that matter. It is much more like a generalized sensibility, the flesh of time, as Merleau-Ponty puts it. The flesh in Merleau-Ponty’s writing is a concept that makes palpable how categorial differences like subject and object emerge from a common ground, how they come to be differentiated because they are simply variations in perspective. In that sense time is not intentionally made; it emerges from concrete relations and thus can also be generated through changes in perspective, something that art always does.

FR: What does ‘playing “makes” time’ mean to you, Jon?

JRe: For me it’s about music as an experimental medium. Not meaning experimental as a genre, but really in the laboratory sense of probing at the stuff of reality. When you make music you’re always experimenting with different kinds of time, half-time, double-time – does this time fit inside this time, how far can I stretch this out?

“Music is a hidden arithmetic exercise of the soul, which does not know that it is counting,” said Gottfried Leibniz. I’m counting without knowing, and you’re listening to me count and also counting. We create an expanding manifold while walking across it, experiencing those timings and durations, and others can plug in or out of it.

JRy: Much of my favorite contemporary music is made by people who improvise, all kinds, all genres. These performers make complex figurations of time that defy description yet are perceived and enjoyed by listeners. To me this implies the intuitive knowledge of timing I call “knowing when”. This knowledge is not the habitus of specialists, it cannot be: how else could we account for the fact that amateurs, mere listeners, get music? In conservatories this is too often described as a matter of “reflexes”, the previous century’s knee-jerk image of the richness of our neural complexity and the 20th-century modernist deprecation of musicianship.

Bios

Prof. Dr. Yvonne Förster is currently research fellow at the Institute for Advanced Studies in Cultural Sciences at University of Konstanz, Germany. Until 2016 she held a position as junior professor of philosophy of culture and art at Leuphana University Lüneburg, dept. of Philosophy and Art and was a senior research fellow at the Institute for Advanced Studies MECS (Media Cultures of Computer Simulation) at Leuphana University in 2017. She did her PhD in philosophy at Friedrich-Schiller-University Jena in 2009 and was appointed visiting professor of aesthetics at the Bauhaus University Weimar in 2009/10. Her research focuses on posthumanism, theories of embodiment, phenomenology, aesthetics and fashion theory.

Warrick Roseboom is a computational neuroscientist and cognitive science researcher focusing on human perception. His research is most generally interested in how usually coherent perception can result from varying and sometimes incoherent sensory input, with a particular focus on human temporal perception. In his research experiments he uses a combination of human behavioural, computational modelling, neuroimaging, and artificial systems approaches. Roseboom completed a PhD under the supervision of Dr. Derek Arnold in the Perception Lab at the School of Psychology, The University of Queensland, Australia and earlier worked in the Synaptic Plasticity Lab at the Queensland Brain Institute. He is currently leader of the Time Perception Group at the Sackler Centre for Consciousness Science, University of Sussex, UK and is part of the six-partner EU project Timestorm, which aims to equip artificial systems with human-like temporal cognition.

Joel Ryan is a composer, inventor and scientist. He is a pioneer in the design of musical instruments based on real-time digital signal processing. He currently works at STEIM in Amsterdam, tours with the Frankfurt Ballet and is a teacher at the Institute of Sonology in The Hague. Starting from a scientific rather than a musical education, he moved into music by degrees from physics via philosophy. Ryan seeks to bring concreteness to digital electronic media through the intelligent touch of the performer. Taking time as an epitome of music making, Ryan’s work looks to zoom in on the innate capacity for perceiving its quantity, outside of language and analytic thinking.

Jaime del Val is a meta-media artist, philosopher, activist, promoter of the Metabody Forum, project and Institute, and the non-profit organisation Reverso. Since 2000 Jaime has developed transdisciplinary projects in the transvergence of arts (dance, performance, architecture, visual and media arts, music), technologies, critical theory and activism, proposing redefinitions of embodiment, perception and public space, which have been presented in over 100 performances and installations in over 50 cities in 25 countries. The recent evolution of these projects led to the European project Metabody, with a network of 38 partners from 16 countries, in which Jaime has organised over 33 international Forums and coordinated over 60 research projects. Jaime’s philosophical work has been published in over 30 essays in journals such as Performance Research (Routledge), Leonardo and others. Jaime has edited the journals Reverso and Metabody, is a member of the organizing committee of the Beyond Humanism Conference series, and has given over 100 lectures at universities such as UC Berkeley, Stanford, MIT MediaLab, Duke University, Yale, Cambridge and others. As an activist Jaime has been active for the last 20 years in queer, environmental and Occupy movements and now promotes ontohacking politics in the Algoricene.

Jonathan Reus is an American musician, researcher and curator whose work blends machine aesthetics with free improvisation. His broader research is into instruments and instrumentations, and their potential to bring new insight into knowing the world. Jonathan is associate lecturer of Computing and Coded Culture at the Institute for Culture and Aesthetics of Digital Media in Leuphana University, Lüneburg, where he has created teaching methods for hybrid coursework blending science, mathematics and cultural studies. He is also a lecturer in performative sound art at the ArtEZ academy of art in Arnhem.

Flora Reznik is an Argentinian artist based in the Netherlands. She studied at Universidad del Cine (FUC) and obtained a diploma in Philosophy (University of Buenos Aires) while working as a video editor in film and TV, and co-founded the contemporary arts magazine “CIA”. In the Netherlands she graduated from the ArtScience Interfaculty department at the Royal Academy of Art, and she currently co-curates the artist initiative Platform for Thought in Motion while developing her work as an artist in the fields of video, performance, installation and text. Her work engages with the notions of physicality, territory and time.

From the curators of the Reading Room: Thank you again to Jaime del Val, Yvonne Förster, Warrick Roseboom and Joel Ryan for their insights both in The Reading Room and in this piece! We hope to welcome you all back in Den Haag. And special thanks to all the participants that joined in this gathering.